<div>

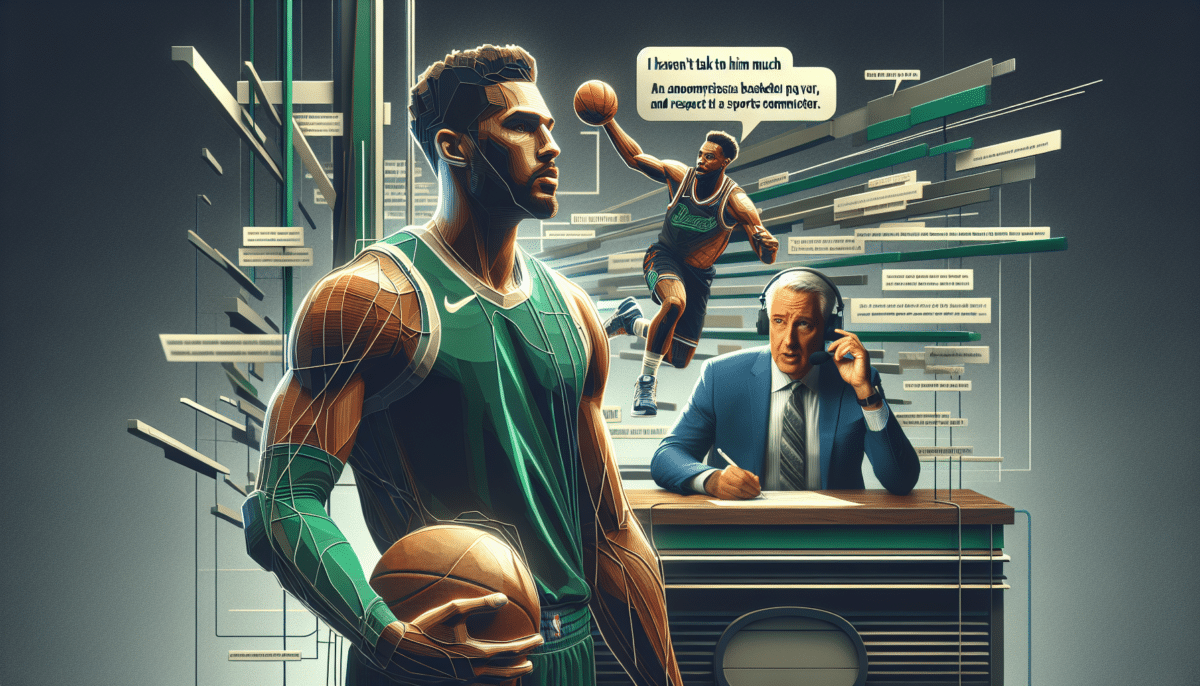

Noise-canceling headphones are good at creating a quiet space for the listener, but letting select sounds from the environment through remains a challenge for researchers. The latest AirPods Pro from Apple, for example, automatically adjust sound levels for the wearer, such as when they sense a conversation, but give the user little control over whom to listen to or when this filtering happens.

A team at the University of Washington has designed an AI system, known as “Target Speech Hearing,” that enables a headphone user to focus on a speaker by looking at them for a few seconds to “enroll” them. The system then eliminates all other noises in the background and outputs only the voice of the enrolled speaker in real time, even if the listener moves around in a noisy environment or turns away from the speaker.

The team presented their work on May 14 at the ACM CHI Conference on Human Factors in Computing Systems in Honolulu. The code for the proof-of-concept device is available for others to build on. The system is not commercially available.

<figcaption class="text-darken text-low-up mt-4" itemprop="caption">Credit: University of Washington</figcaption>

“AI is often associated with chatbots for answering questions online,” explained senior author Shyam Gollakota, a professor at the University of Washington’s Paul G. Allen School of Computer Science & Engineering. “However, this project demonstrates AI’s capability to enhance an individual’s auditory perception through headphones, catering to their preferences. With our technology, individuals can now isolate and clearly hear a single speaker, even in noisy surroundings with multiple conversations happening.”

To use the system, a person wearing off-the-shelf headphones fitted with microphones looks at the speaker and presses a button. The speaker's voice should then reach the microphones on both sides of the headset at the same time; the system tolerates a 16-degree margin of error. The headphones send that signal to an on-board embedded computer, where the team's machine learning software learns the selected speaker's vocal patterns. The system then keeps playing back that speaker's voice to the listener, even as they move around. Its ability to focus on the enrolled voice improves as the speaker keeps talking, because each additional stretch of speech gives it more training data.
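The article does not say how the headset decides that the wearer is facing the speaker. As a rough illustration of the geometry behind the 16-degree margin, the Python sketch below estimates the delay between the left and right microphones by cross-correlation and accepts enrollment only if that delay is no larger than what a speaker 16 degrees off-axis would produce. The microphone spacing, sample rate, and function names are hypothetical, and this classic signal-processing check is not necessarily what the UW system, which uses machine learning, actually does.

```python
import math
import random

# Illustrative constants; all three are assumptions, not values from the article.
SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C
MIC_SPACING = 0.18      # m, hypothetical distance between left and right mics
SAMPLE_RATE = 16_000    # Hz, hypothetical capture rate


def arrival_delay(angle_deg: float) -> float:
    """Far-field time difference of arrival (seconds) between the two mics
    for a speaker angle_deg away from the direction the wearer is facing."""
    return MIC_SPACING * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND


def estimate_lag(left: list[float], right: list[float], max_lag: int) -> int:
    """Lag in samples that maximizes the cross-correlation of the two channels.
    A positive lag means the sound reached the left mic first."""
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[i] * right[i + lag] for i in range(n) if 0 <= i + lag < n)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def is_facing_speaker(left: list[float], right: list[float],
                      tolerance_deg: float = 16.0) -> bool:
    """Hypothetical enrollment check: True if the inter-mic delay is no larger
    than the delay produced by a speaker tolerance_deg off-axis."""
    max_lag = math.ceil(arrival_delay(90.0) * SAMPLE_RATE)  # largest possible lag
    tolerance = arrival_delay(tolerance_deg) * SAMPLE_RATE  # about 2.3 samples here
    return abs(estimate_lag(left, right, max_lag)) <= tolerance


def delayed(signal: list[float], k: int) -> list[float]:
    """Copy of signal delayed by k >= 0 samples (zero-padded at the start)."""
    return [0.0] * k + signal[: len(signal) - k]


if __name__ == "__main__":
    rng = random.Random(0)
    voice = [rng.gauss(0.0, 1.0) for _ in range(2000)]  # stand-in for speech
    print(is_facing_speaker(voice, delayed(voice, 0)))  # straight ahead: True
    print(is_facing_speaker(voice, delayed(voice, 6)))  # about 46 degrees off: False
```

With these assumed values, a 16-degree tolerance works out to roughly 0.14 ms, or about two samples. It also suggests why enrollment struggles when another loud voice comes from the same direction as the target: that voice arrives with the same near-zero delay.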


The team tested the system on 21 subjects, who on average rated the clarity of the enrolled speaker's voice nearly twice as high as that of the unfiltered audio.

This development extends the team’s prior research on “semantic hearing,” which allowed users to choose particular sound categories, like bird sounds or human voices, that they want to hear while blocking out other noises in the environment.

Currently, the Target Speech Hearing (TSH) system can focus on only one speaker at a time, and it can enroll a speaker only when no other loud voice is coming from the same direction as the target speaker. If the user is not satisfied with the sound quality, they can re-enroll the speaker to improve clarity.

The team is looking to expand the application of the system to earbuds and hearing aids in the future.

Other co-authors on the research paper included Bandhav Veluri, Malek Itani, and Tuochao Chen, doctoral students from the Allen School at the University of Washington, and Takuya Yoshioka, the research director at AssemblyAI.

<div class="article-main__more p-4">

<strong>More information:</strong>

Bandhav Veluri et al, Look Once to Hear: Target Speech Hearing with Noisy Examples, presented at the CHI Conference on Human Factors in Computing Systems (2024). Full text: <a target="_blank" href="https://dl.acm.org/doi/10.1145/3613904.3642057" rel="noopener">DOI: 10.1145/3613904.3642057</a>. Provided by the <a target="_blank" href="https://techxplore.com/partners/university-of-washington/" rel="noopener">University of Washington</a>.

Researchers at the University of Washington have developed AI headphones that let the wearer listen to a single person in a crowded, noisy environment after looking at them just once.
